Boolean functions, sometimes also called switching functions, are functions that take as their input zero or more boolean values (1 or 0, true or false, etc.) and output a single boolean value. The number of inputs to the function is called the arity of the function and is denoted as k. Every k-ary function …
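To make arity concrete, here is a minimal Python sketch that tabulates a k-ary boolean function over all 2^k possible input combinations. It assumes booleans are represented as the integers 0 and 1; the `truth_table` helper and the three-input `majority` function are hypothetical examples chosen for illustration.

```python
from itertools import product
from typing import Callable, Dict, Tuple


def truth_table(fn: Callable[..., int], k: int) -> Dict[Tuple[int, ...], int]:
    """Evaluate a k-ary boolean function on every one of its 2**k input combinations."""
    return {inputs: fn(*inputs) for inputs in product((0, 1), repeat=k)}


def majority(a: int, b: int, c: int) -> int:
    # Hypothetical example with arity k = 3: outputs 1 when at least two inputs are 1.
    return 1 if a + b + c >= 2 else 0


for inputs, output in truth_table(majority, 3).items():
    print(inputs, "->", output)
```

Calling `truth_table(lambda: 1, 0)` returns `{(): 1}`, a table with a single row, which covers the case of a function that takes zero inputs.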

Tag: binary

Sep 21 2017

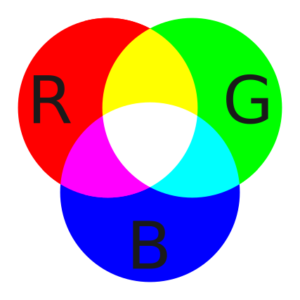

An Introduction to the RGB Color Model

If you are an artist, photographer, graphic designer, or web developer, having a firm understanding of colors is a necessity. Key to being able to study and discuss colors is a formal framework for quantifying their properties. Abstract mathematical models called color models do just this, allowing people to discuss the qualities of a color …

Jan 29 2017

The Method of Complements

Abraham Lincoln once famously said, “Everybody loves a compliment.” I suspect that if he had been a mathematician he would have loved complements, too. We’ve already seen what complements are and talked about the two most common: the radix complement and the diminished radix complement. Now it’s time to explore how we can leverage complements …
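As a rough sketch of the two complements and of the classic trick they enable (subtracting by adding a complement and discarding the final carry), the Python below works on nonnegative integers with a fixed number of digits in an arbitrary base. The function names are hypothetical, and reducing the radix complement of 0 to 0 is a convention assumed here.

```python
def _check_fits(x: int, base: int, digits: int) -> None:
    # Both complements are defined relative to a fixed number of digits.
    if not 0 <= x < base ** digits:
        raise ValueError(f"{x} does not fit in {digits} base-{base} digits")


def diminished_radix_complement(x: int, base: int, digits: int) -> int:
    """(base**digits - 1) - x: the nines complement in base 10, ones complement in base 2."""
    _check_fits(x, base, digits)
    return (base ** digits - 1) - x


def radix_complement(x: int, base: int, digits: int) -> int:
    """base**digits - x: the tens complement in base 10, twos complement in base 2.

    Taken modulo base**digits (an assumed convention) so the complement of 0 is 0.
    """
    _check_fits(x, base, digits)
    return (base ** digits - x) % (base ** digits)


def subtract_via_complement(a: int, b: int, base: int, digits: int) -> int:
    """Compute a - b for a >= b by adding the radix complement of b and dropping the carry."""
    _check_fits(a, base, digits)
    if b > a:
        raise ValueError("this sketch only handles a >= b")
    return (a + radix_complement(b, base, digits)) % (base ** digits)


print(subtract_via_complement(5236, 1847, 10, 4))  # 3389
```

For example, with four base-10 digits, the nines complement of 1847 is 8152 and its tens complement is 8153; adding 5236 + 8153 gives 13389, which becomes 3389 = 5236 - 1847 once the carry is dropped.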

Jan 10 2017

Number System Complements

In my last post about binary signed integers, I introduced the ones complement representation. At the time, I said that the ones complement was found by taking the bitwise complement of the number. My explanation of how to do this was simple: invert each bit, flipping 1 to 0 and vice versa. While it’s true that …
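Here is a small Python sketch comparing two views of the ones complement for N-bit values: flipping each bit of the bitstring, and the arithmetic form (2^N - 1) - x, which is the diminished radix complement in base 2. The helper names are hypothetical.

```python
def invert_bits(bits: str) -> str:
    """Flip every bit of a bitstring, turning '1' into '0' and vice versa."""
    if set(bits) - {"0", "1"}:
        raise ValueError("expected a string of 0s and 1s")
    return "".join("1" if b == "0" else "0" for b in bits)


def ones_complement(x: int, n: int) -> int:
    """Arithmetic form of the n-bit ones complement: (2**n - 1) - x."""
    if not 0 <= x < 2 ** n:
        raise ValueError("x must fit in n bits")
    return (2 ** n - 1) - x


n = 4
for x in range(2 ** n):
    flipped = invert_bits(format(x, f"0{n}b"))
    # Flipping the bits yields the same value as the subtraction, for every 4-bit x.
    assert int(flipped, 2) == ones_complement(x, n)

print(invert_bits("0101"))  # 1010
```

The same equivalence is why masking Python's bitwise NOT to N bits, `~x & (2**n - 1)`, also produces the ones complement.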

Jul 26 2016

Binary Signed Integers – Ones Complement

In the last post, we saw that one of the major failings of the signed magnitude representation was that addition and subtraction could not be performed on the same hardware as for unsigned integers. As I pointed out, the reason for this is that negating a number in signed magnitude does not yield the additive …
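To see the failure concretely, the Python sketch below encodes +3 and -3 as 4-bit signed magnitude patterns (one sign bit followed by three magnitude bits) and adds them the way unsigned hardware would, keeping only the low 4 bits. The helper names and the choice of 4 bits are assumptions for illustration.

```python
def to_signed_magnitude(value: int, n: int) -> int:
    """Encode value as an n-bit signed magnitude pattern: sign bit, then n-1 magnitude bits."""
    magnitude = abs(value)
    if magnitude >= 2 ** (n - 1):
        raise ValueError("magnitude does not fit in n - 1 bits")
    sign = 1 if value < 0 else 0
    return (sign << (n - 1)) | magnitude


def unsigned_add(a: int, b: int, n: int) -> int:
    """Add two n-bit patterns like an unsigned adder: keep only the low n bits."""
    return (a + b) % (2 ** n)


n = 4
plus_three = to_signed_magnitude(3, n)    # 0011
minus_three = to_signed_magnitude(-3, n)  # 1011
total = unsigned_add(plus_three, minus_three, n)
print(format(total, f"0{n}b"))  # 1110, not 0000
```

The sum 1110 reads as -6 in signed magnitude rather than 0, so the unsigned adder does not treat the signed magnitude -3 as the additive inverse of +3.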

Mar 09 2016

Unsigned Binary Integers and Internal Congruence

This is going to be another one of my “selfish” posts – written primarily for me to refer back to in the future and not because I believe it will benefit anyone other than me. The idea is one that I always took for granted but had a hard time proving to myself once I decided …

Feb 01 2016

Binary Signed Integers – Signed Magnitude Shortcomings

I previously discussed the signed magnitude solution to representing signed integers as binary strings and pointed out that while it has the advantage of being simple, it also has some disadvantages. For starters, N-bit signed magnitude integers have two representations for zero: positive zero (a bitstring with N zeros) and negative zero (a bitstring with …
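The duplicate zero is easy to spot by decoding every pattern. This Python sketch assumes the usual layout of one sign bit followed by N-1 magnitude bits, uses a hypothetical `from_signed_magnitude` helper, and lists every 4-bit pattern that decodes to zero.

```python
def from_signed_magnitude(pattern: int, n: int) -> int:
    """Decode an n-bit signed magnitude pattern into a Python int."""
    sign = (pattern >> (n - 1)) & 1
    magnitude = pattern & ((1 << (n - 1)) - 1)
    return -magnitude if sign else magnitude


n = 4
# Map every n-bit pattern (as a bitstring) to the integer it represents.
decoded = {format(p, f"0{n}b"): from_signed_magnitude(p, n) for p in range(2 ** n)}
zeros = [bits for bits, value in decoded.items() if value == 0]
print(zeros)  # ['0000', '1000']
```

Because two of the 16 patterns share the value 0, only 15 distinct integers (-7 through 7) are representable in 4 bits.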

Nov 18 2015

Binary Signed Integers – Signed Magnitude

We humans and our meat computers don’t have any trouble recognizing the sign of a number. If there is a minus sign, “-,” in front of a number, that number is negative. If a number is prefixed by a plus sign, “+,” or, the more likely case, has no prefix at all, then the number …