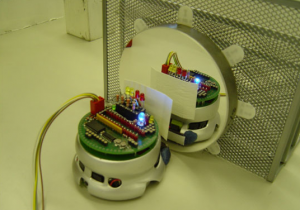

credit: Junichi Takeno

The Discovery Channel has a story today about researchers at Meiji University in Japan who have created a robot able to tell the difference between looking at its own image reflected in a mirror and looking at an identical robot. The research, led by Junichi Takeno, is a big advance towards understanding human consciousness and emotions and, ultimately, creating self-aware, emotive robots.

According to Takeno, people not only learn behavior during cognition (information processing), but also learn to think (process information) while performing some behavior. Because of this complex, recursive relationship between thinking and acting, a robot designed to be self-aware needs an area of its neural network that is common to both processes.

The robot designed by Takeno and his team uses such a neural network, and the team developed a unique signaling system to show that it works as intended. Several LEDs of different colors are connected directly to the artificial neurons in the robot’s brain and light up depending on the neural processing that is taking place. The red LEDs light when the robot is performing its own behavior. If it sees another robot do something, the green LEDs light. Finally, and most importantly, a single blue LED lights when the robot sees another robot perform an action and imitates that action. The researchers believe imitation is evidence of consciousness because, during imitation, the robot both recognizes behavior in a robot other than itself and transfers that behavior to itself.
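The LED scheme described above can be sketched as a simple lookup. This is only an illustration, not the team's implementation: in the real robot the LEDs are wired to artificial neurons rather than chosen by rules, and the names `Led` and `active_led`, the three boolean inputs, and the assumption that only one color is reported at a time are all hypothetical.

```python
from enum import Enum
from typing import Optional


class Led(Enum):
    RED = "red"      # robot is performing its own behavior
    GREEN = "green"  # robot sees another robot do something
    BLUE = "blue"    # robot sees another robot act and imitates it


def active_led(own_behavior: bool, observes_other: bool, imitating: bool) -> Optional[Led]:
    """Return the LED color for a given state (hypothetical simplification).

    Assumes one color at a time, with imitation taking priority.
    """
    if observes_other and imitating:
        return Led.BLUE
    if observes_other:
        return Led.GREEN
    if own_behavior:
        return Led.RED
    return None  # nothing described in the article applies


# The first experiment: the self robot watches the other robot and copies it.
print(active_led(own_behavior=True, observes_other=True, imitating=True))
```

The priority order is a design choice made for the sketch; the article does not say whether several colors can light at once.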

The team showed that their robot was capable of some degree of self-recognition by performing two experiments. In the first experiment, one robot, the “self” robot, was placed in front of a physically identical “other” robot. As the other robot moved, the self robot mimicked its actions and the blue LED lit, indicating that the self robot recognized it was imitating the other.

In the second experiment, the self robot was placed in front of a mirror. When it began moving, the blue LED flashed only 30 percent of the time. So although the self robot could in principle have taken its own reflection for a separate robot, the researchers found that 70 percent of the time it knew it was looking at itself. The goal now is to optimize the neural network and algorithms so that the robot is able to recognize itself 100 percent of the time.
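The arithmetic behind those figures is straightforward: if the blue LED marks moments when the robot treats what it sees as another robot, the self-recognition rate is the share of moments when the blue LED stays off. The sketch below assumes a hypothetical log of on/off samples; the function name `self_recognition_rate` and the ten sample values are made up for illustration and are not the researchers' data.

```python
def self_recognition_rate(blue_led_samples):
    """Fraction of samples in which the blue LED was off.

    blue_led_samples: list of booleans, True when the blue LED was lit.
    """
    if not blue_led_samples:
        raise ValueError("need at least one sample")
    off_count = sum(1 for lit in blue_led_samples if not lit)
    return off_count / len(blue_led_samples)


# Illustrative log only: blue LED lit in 3 of 10 samples (30 percent).
samples = [True] * 3 + [False] * 7
rate = self_recognition_rate(samples)
print(f"{rate:.0%}")
```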